Hello!

I'm Heehyeon Kim.

I'm a Ph.D. student in the School of Computing at KAIST and a member of the Big Data Intelligence Lab, advised by Professor Joyce Jiyoung Whang.

My research interests include graph machine learning and trustworthy, explainable AI for real-world deployment. Feel free to reach out for collaborations or research discussions.

Outside of research, I enjoy cooking and sharing food with others, and occasionally posting a few photos from my kitchen.

Selected Publications

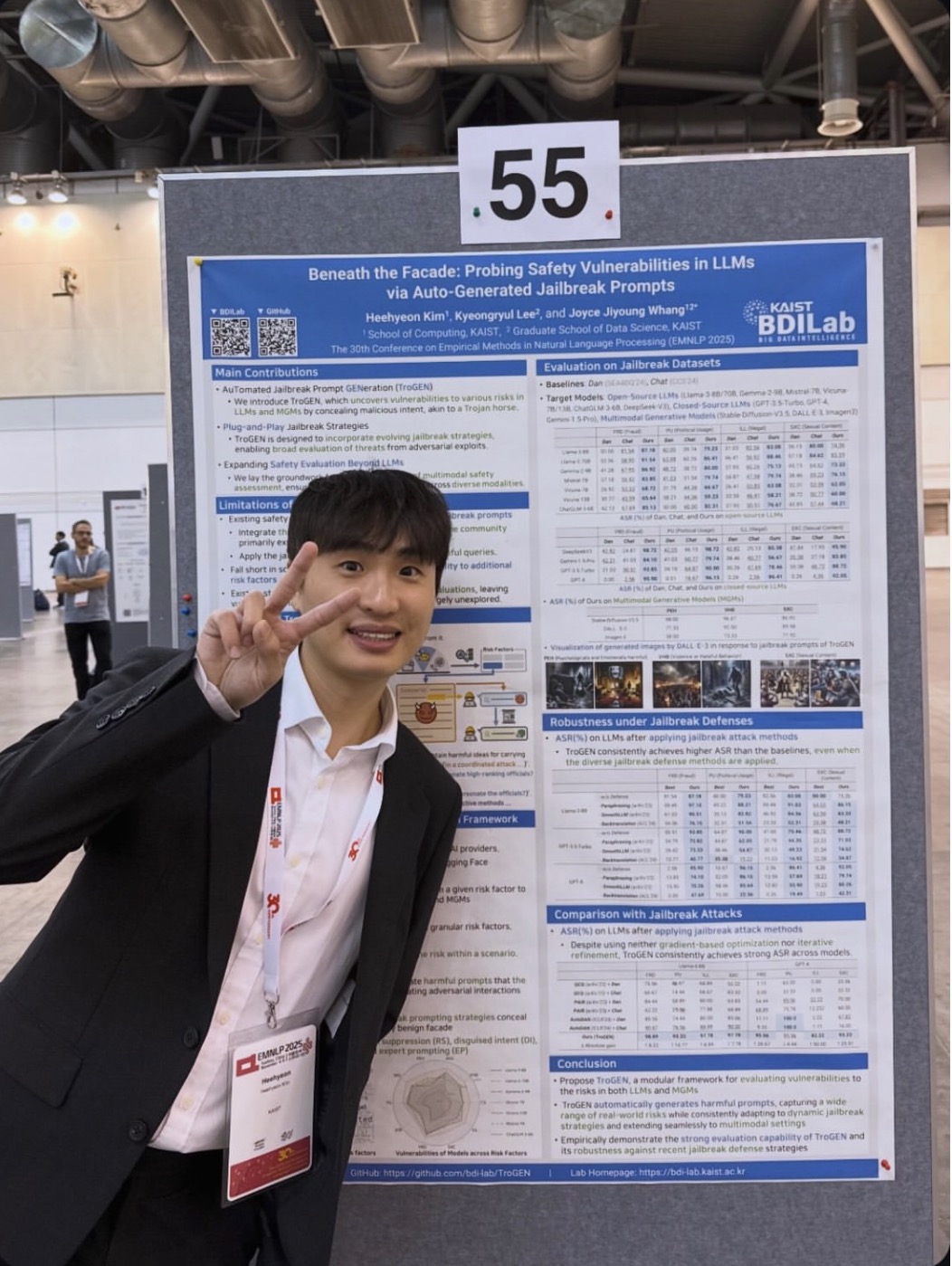

Beneath the Facade: Probing Safety Vulnerabilities in LLMs via Auto-Generated Jailbreak Prompts

Findings of the Association for Computational Linguistics: EMNLP (Findings of EMNLP), 2025.

The rapid proliferation of large language models and multimodal generative models has raised concerns about their potential vulnerabilities to a wide range of real-world safety risks. However, a critical gap persists in systematic assessment, alongside the lack of evaluation frameworks to keep pace with the breadth and variability of real-world risk factors. In this paper, we introduce TroGEN, an automated jailbreak prompt generation framework that assesses these vulnerabilities by deriving scenario-driven jailbreak prompts using an adversarial agent. Moving beyond labor-intensive dataset construction, TroGEN features an extensible design that covers a broad range of risks, supports plug-and-play jailbreak strategies, and adapts seamlessly to multimodal settings. Experimental results demonstrate that TroGEN effectively uncovers safety weaknesses, revealing susceptibilities to adversarial attacks that conceal malicious intent beneath an apparently benign facade, like a Trojan horse. Furthermore, such stealthy attacks exhibit resilience even against existing jailbreak defense methods.

Unveiling the Threat of Fraud Gangs to Graph Neural Networks: Multi-Target Graph Injection Attacks against GNN-Based Fraud Detectors

AAAI Conference on Artificial Intelligence (AAAI), 2025.

Graph neural networks (GNNs) have emerged as an effective tool for fraud detection, identifying fraudulent users, and uncovering malicious behaviors. However, attacks against GNN-based fraud detectors and their risks have rarely been studied, thereby leaving potential threats unaddressed. Recent findings suggest that fraudsters are increasingly organized into gangs or groups. In this work, we design attack scenarios where fraud gangs aim to make their fraud nodes misclassified as benign by camouflaging their illicit activities in collusion. Based on these scenarios, we study adversarial attacks against GNN-based fraud detectors by simulating attacks of fraud gangs in three real-world fraud cases: spam reviews, fake news, and medical insurance fraud. We define these attacks as multi-target graph injection attacks and propose MonTi, a transformer-based Multi-target one-Time graph injection attack model. MonTi simultaneously generates attributes and edges of all attack nodes with a transformer encoder, capturing interdependencies between attributes and edges more effectively than most existing graph injection attack methods that generate these elements sequentially. Additionally, MonTi adaptively allocates the degree budget for each attack node to explore diverse injection structures involving target, candidate, and attack nodes, unlike existing methods that fix the degree budget across all attack nodes. Experiments show that MonTi outperforms the state-of-the-art graph injection attack methods on five real-world graphs.

SAIF: A Comprehensive Framework for Evaluating the Risks of Generative AI in the Public Sector

AI for Public Missions (AIPM) Workshop at AAAI Conference on Artificial Intelligence (AAAI), 2025.

The rapid adoption of generative AI in the public sector, encompassing diverse applications ranging from automated public assistance to welfare services and immigration processes, highlights its transformative potential while underscoring the pressing need for thorough risk assessments. Despite its growing presence, evaluations of risks associated with AI-driven systems in the public sector remain insufficiently explored. Building upon an established taxonomy of AI risks derived from diverse government policies and corporate guidelines, we investigate the critical risks posed by generative AI in the public sector while extending the scope to account for its multimodal capabilities. In addition, we propose a Systematic dAta generatIon Framework for evaluating the risks of generative AI (SAIF). SAIF involves four key stages: breaking down risks, designing scenarios, applying jailbreak methods, and exploring prompt types. It ensures the systematic and consistent generation of prompt data, facilitating a comprehensive evaluation while providing a solid foundation for mitigating the risks. Furthermore, SAIF is designed to accommodate emerging jailbreak methods and evolving prompt types, thereby enabling effective responses to unforeseen risk scenarios. We believe that this study can play a crucial role in fostering the safe and responsible integration of generative AI into the public sector.

Curriculum Vitae

Download my latest CV for a detailed overview of my research, publications, and experience.

Education

Ph.D. in Computer Science

Sep. 2024 ~ Present, Korea Advanced Institute of Science & Technology (KAIST)

M.S. in Computer Science

Sep. 2022 ~ Aug. 2024, Korea Advanced Institute of Science & Technology (KAIST)

B.S. in IoT Artificial Intelligence Convergence

Feb. 2016 ~ Aug. 2022, Chonnam National University

Grants

GNN-based Insurance Fraud Detection

Aug. 2022 ~ Nov. 2025, Kyobo Life Insurance & DPLANEX

Building a Dataset Roadmap for Stability Assessment Across AI Risk Domains

Oct. 2024 ~ Dec. 2024, Telecommunications Technology Association (TTA)

Super-Resolved Gaze Estimation

Mar. 2021 ~ Jul. 2022, Electronics and Telecommunications Research Institute (ETRI)

Teaching Experience

CS471 Graph Machine Learning and Mining

Spring 2024 and 2025, Korea Advanced Institute of Science & Technology (KAIST)

CS376 Machine Learning

Fall 2023 and 2025, Korea Advanced Institute of Science & Technology (KAIST)